from bs4 import BeautifulSoup

import requests

from urllib.parse import urljoin

url = 'http://www.runoob.com/python/python-100-examples.html'

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.79 Safari/537.36'} #反爬机制,其实没用上。。。

r = requests.get(url, headers = headers).content.decode('utf-8')

soup = BeautifulSoup(r, 'lxml')

re_a = soup.find(id = 'content').ul.find_all('a')

list = []

for i in re_a:

list.append(i['href']) #收集100个url

data = []

for x in list:

dict = {}

test = requests.get('http://www.runoob.com' + x, headers = headers).content.decode('utf-8')

soup_test = BeautifulSoup(test, 'html.parser')

dict['title'] = soup_test.find(id = 'content').h1.text #分析html结构

dict['problem'] = soup_test.find(id = 'content').find_all('p')[1].text

dict['analyse'] = soup_test.find(id = 'content').find_all('p')[2].text

try:

dict['code'] = soup_test.find(class_="hl-main").text

except Exception as e:

dict['code'] = soup_test.find('pre').text

with open('100-py.csv', 'a+', encoding = 'utf-8') as file: #文件保存

file.write(dict['title'] + '\n')

file.write(dict['problem'] + '\n')

file.write(dict['analyse'] + '\n')

file.write(dict['code'] + '\n')

file.write('-' * 50 + '\n')

file.write('\n')

print(dict['title'])

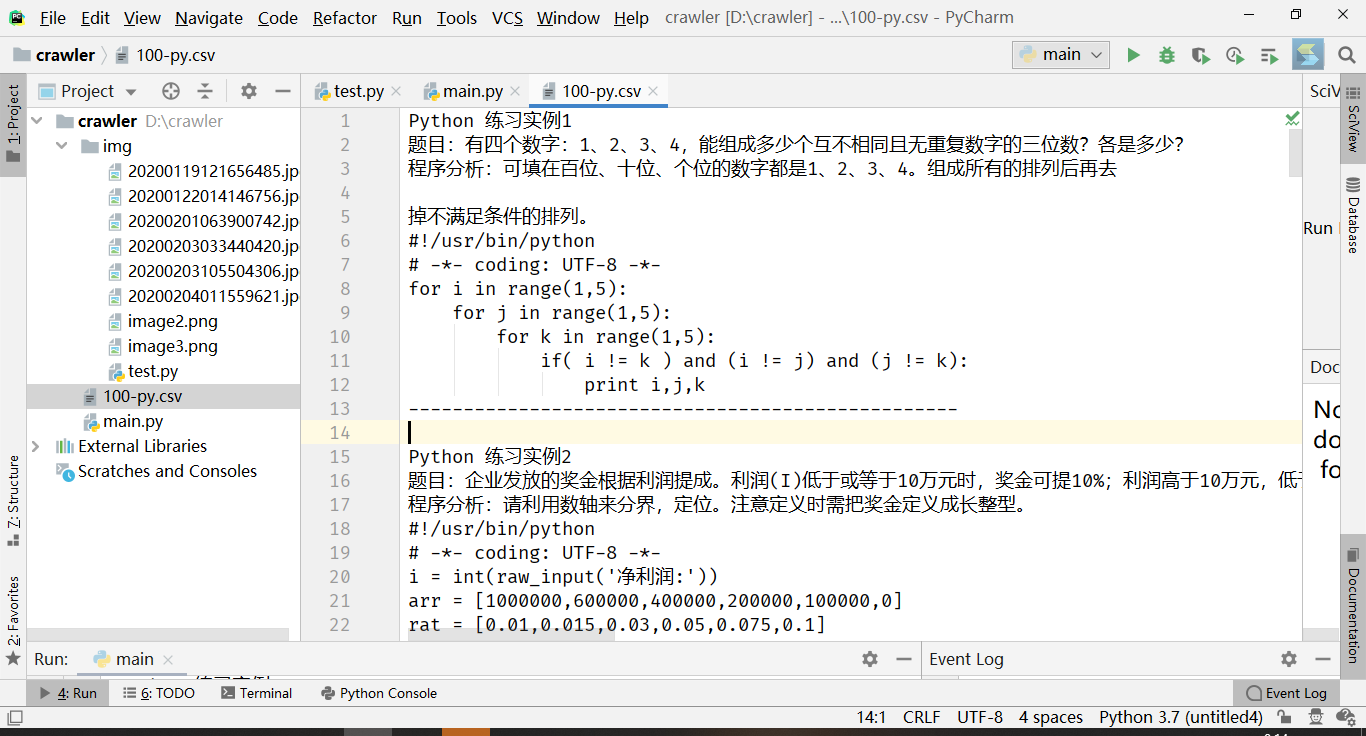

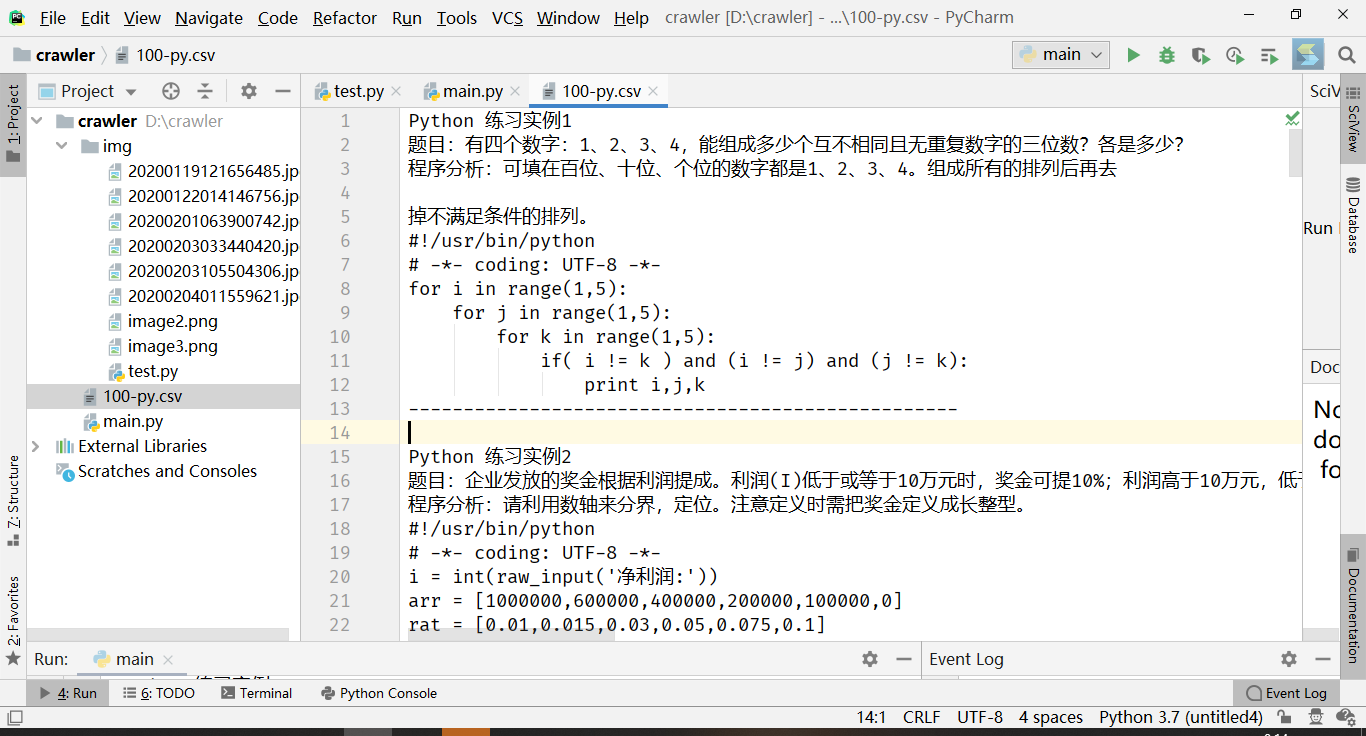

- 爬取结果